2023 Project

At Goldsmiths, University of London.

An outdoor mushroom identification and AR experience application, and an AR tool that transforms human heads into potatoes.

Mushroom Picking is an outdoor mushroom identification and AR experience application created using the Lens Studio tool on the Snapchat platform. Users can scan and analyze specific types of mushrooms using the Snapchat lens, interact with their corresponding virtual AR figures, and experience the effects that consuming these mushrooms might produce.

When foraging for mushrooms, how can you distinguish between poisonous and edible mushrooms, have a playful experience, and learn the effects of consuming mushrooms?

Unpleasant

Hallucinogenic

Poisonous

The concept for this project was inspired by three key factors.

Firstly, mushrooms are widely distributed across the UK and hold a significant place in its culture, with mushroom foraging being a popular outdoor activity.

Secondly, due to the toxicity and hallucinogenic properties of some mushrooms, attempting to consume them can be risky, yet there is a curiosity and interest in the hallucinatory experience they offer.

Lastly, many botanical gardens and forest parks in the UK, for conservation reasons, do not allow the direct picking or removal of mushrooms, making virtual foraging an appealing alternative.

Our team conducted field research at the Royal Botanic Gardens, Kew, in London to support this project concept.

We found that London is home to a diverse range of mushrooms, but without expert knowledge, foragers face risks from unknown toxicity. The gardens also prohibit removing plants and fungi. We identified a distinctive mushroom species, Devil’s Fingers, as an ideal candidate for inclusion in the app.

The app is designed for outdoor activities like mushroom foraging.

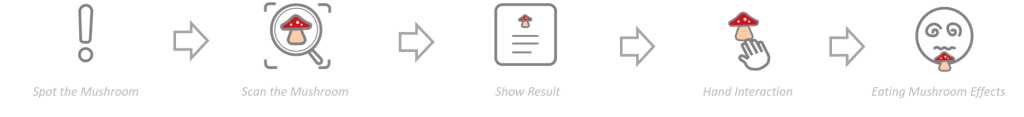

Users need to find a specific type of mushroom outdoors, then scan it with the app’s rear camera to identify its type and gather basic information. After learning the mushroom’s type, a virtual image of the mushroom is displayed for users to ‘pick’ via gesture recognition. Finally, by clicking the “Try Eating” button, users can experience the effects of consuming the specific mushroom through the AR interface.
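The flow described above (scan, identify, pick, try eating) can be read as a small state machine. The following is a minimal Python sketch of that reading, not the Lens Studio implementation: the names `Stage`, `MushroomSession`, and the `on_*` methods are hypothetical, and the species-to-effect mapping simply restates the two effects named in this write-up.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Stage(Enum):
    SCANNING = auto()    # user is pointing the rear camera at a mushroom
    IDENTIFIED = auto()  # classifier has recognized a supported species
    PICKED = auto()      # user has "picked" the virtual mushroom by gesture
    EFFECT = auto()      # "Try Eating" was pressed and the effect is playing


# Effects as described in this write-up; any other label is ignored.
EFFECTS = {"Fly Agaric": "hallucination", "Devil's Fingers": "death"}


@dataclass
class MushroomSession:
    stage: Stage = Stage.SCANNING
    species: Optional[str] = None

    def on_scan(self, label: str) -> None:
        """Advance to IDENTIFIED only when a supported species is recognized."""
        if self.stage is Stage.SCANNING and label in EFFECTS:
            self.species = label
            self.stage = Stage.IDENTIFIED

    def on_pick_gesture(self) -> None:
        """The pick gesture only counts after a mushroom has been identified."""
        if self.stage is Stage.IDENTIFIED:
            self.stage = Stage.PICKED

    def on_try_eating(self) -> Optional[str]:
        """Return the effect to play, or None if nothing has been picked yet."""
        if self.stage is not Stage.PICKED:
            return None
        self.stage = Stage.EFFECT
        return EFFECTS[self.species]
```

Modeling the steps as explicit stages means the “Try Eating” button does nothing until a mushroom has been both identified and picked, which matches the order described above.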

The project mainly involves training a machine learning model, gesture recognition, Lens effects, and 3D model animation.

Because this project builds on an existing image recognition model in TensorFlow rather than training one from scratch, it does not require a large training set. We therefore sourced approximately 150 images of each type of mushroom from the internet for model training.
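Reusing an existing model with a small dataset is usually done through transfer learning. The sketch below shows one common way to set that up in Keras. It is an illustration under assumptions, not the project's actual training script: the choice of MobileNetV2 as the base model, the 224×224 input size, the 80/20 train/validation split, and the function names are all assumed here.

```python
def split_counts(images_per_class, val_fraction=0.2):
    """Return (train, validation) image counts for one class.

    The 20% validation share is an assumption for illustration.
    """
    val = round(images_per_class * val_fraction)
    return images_per_class - val, val


def build_classifier(num_classes, image_size=(224, 224), weights="imagenet"):
    """Build a small classifier on top of a frozen, pre-trained base model."""
    # Imported here so split_counts() can be used without TensorFlow installed.
    import tensorflow as tf

    # Assumed base model; any pre-trained image model could take its place.
    base = tf.keras.applications.MobileNetV2(
        input_shape=image_size + (3,), include_top=False, weights=weights
    )
    base.trainable = False  # keep the pre-trained features frozen

    inputs = tf.keras.Input(shape=image_size + (3,))
    # MobileNetV2 expects pixel values scaled to [-1, 1].
    x = tf.keras.applications.mobilenet_v2.preprocess_input(inputs)
    x = base(x, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    # Only this final layer is trained on the mushroom images.
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

With roughly 150 images per species, `split_counts(150)` leaves 120 images for training and 30 for validation. Because only the final dense layer is trained, a dataset of this size can be enough. If the images were stored in one folder per species (a hypothetical layout), `tf.keras.utils.image_dataset_from_directory` could load them with integer labels that match the loss used here.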

The gesture recognition and model animation parts were completed by other team members and are not detailed here.

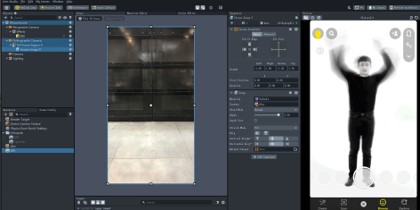

For the Lens effects, I adapted existing Lens templates to create a dizzying hallucination effect and a black-and-white death filter. Users see the real environment through the camera with these effects applied, simulating the visual experience of consuming the mushrooms.

Hallucination effect – Fly Agarics

Death effect – Devil’s Fingers

Depth Materials – displacement component parameters – Color Correction

Camera image blur component + BW Camera effect
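In Lens Studio these effects are assembled from components rather than written by hand, but the death effect's two steps (black-and-white conversion followed by a blur) can be illustrated in plain Python. The sketch below is conceptual only: the function names are hypothetical, the grayscale conversion uses the standard Rec. 601 luma weights, and a simple box blur stands in for whatever blur the Lens Studio component applies.

```python
def to_grayscale(pixel):
    """Convert one (r, g, b) pixel with 0-255 channels to a single gray value."""
    r, g, b = pixel
    # Rec. 601 luma weights: green contributes most to perceived brightness.
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def black_and_white(image):
    """Apply to_grayscale to every pixel of an image given as rows of pixels."""
    return [[to_grayscale(p) for p in row] for row in image]


def box_blur(gray, radius=1):
    """Replace each value with the mean of its neighbors within `radius`.

    Windows are clipped at the image border, so edge pixels average
    over fewer neighbors.
    """
    height, width = len(gray), len(gray[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                gray[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(round(sum(window) / len(window)))
        blurred.append(row)
    return blurred


def death_filter(image, radius=1):
    """Black-and-white conversion followed by a blur, applied to one frame."""
    return box_blur(black_and_white(image), radius)
```

A real-time lens would run the equivalent of `death_filter` on the GPU for every camera frame; the nested loops here only show what is being computed.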

This project largely achieved its initial goals: it gives users a complete flow from mushroom identification to interaction, and then to the experience of consumption effects. It uses virtual means to address the real-world challenges of mushroom foraging and tasting, making good use of what AR technology offers.

However, because the project is built on Snapchat, the file size is limited, which restricts the amount of content and gameplay. It also cannot be launched as an independent app, and scanning with a smartphone proved less smooth than expected.

In the future, rebuilding the project as a standalone app in Unity and porting it to AR devices would be a valuable direction for upgrades.

In addition to the group project, I independently created a simple Lens based on a unique concept: alleviating the nervousness and anxiety some people experience during public speaking, especially when faced with the expressions of audience members or judges. It’s often suggested that speakers imagine the audience’s heads as potatoes to ease their nerves.

My project actualizes this advice through AR, transforming the audience’s heads into potatoes to visually reduce the speaker’s stress.

This project was developed using Lens Studio and released on the Snapchat platform. Designed as a straightforward Snapchat AR filter, the ‘Potato Filter’ allows users to scan other people with their phone, turning their heads into 3D modeled, stylized potatoes.

This filter can replace up to 10 people’s heads at once. Despite Snapchat’s practical limitations, such as occasional failure to recognize a head or jitter, it can still turn multiple heads into potatoes on the same screen.

For instance, replacing the heads of talk show hosts and guests with potatoes would be quite amusing. This whimsical yet practical application not only adds a touch of humor but also serves a therapeutic purpose for public speakers, making it a unique and engaging addition to the AR experience landscape.

Scan the QR code